Research

Research Interests: Brain-Machine Interfaces, Theoretical Neuroscience, & Artificial Intelligence

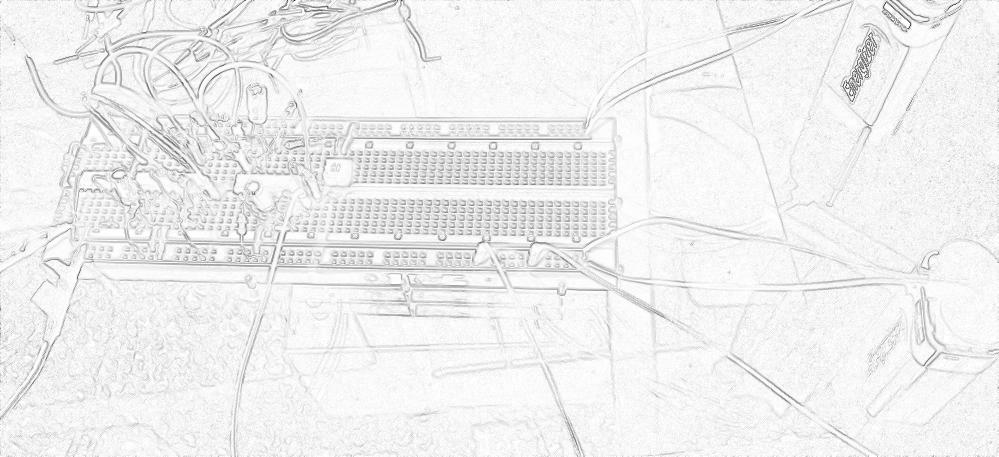

My interest in a more academic career path began after I took a discrete math class and a neuroscience class in high school. The discrete math class exposed me to an entirely new way of looking at mathematics: through the lens of proofs. The neuroscience class introduced me to brain-machine interfaces (BMIs), which I read more about in Miguel Nicolelis's brilliant book Beyond Boundaries. That summer, a friend and I built my first BMI without any prior electrical engineering knowledge: the simple EEG-based device shown above.

As an undergraduate, I did my research at the Redwood Center for Theoretical Neuroscience, where I mainly worked on two projects. The first involved assisting with computational modeling for the Yartsev lab: experimenting with different supervised learning methods to distinguish bat vocalizations from noise, and exploring unsupervised methods to uncover any structure in the vocalizations. The second centered on hierarchical reinforcement learning for graph-based representations of MuJoCo environments.

As a graduate student, I continued my research at the Redwood Center and concentrated on two projects. The first explored hierarchical reinforcement learning in the state-space domain, with an interest in whether the hierarchical states could model the place cells in hippocampal replay events. The second investigated the application of hyperdimensional computing to natural language processing, with a particular focus on its capabilities in parallel architectures and its performance relative to similar models.

Currently, my research at Lawrence Livermore National Laboratory focuses on two areas. The first is the computational/ML side of brain-machine interfaces. The second is applying various ML methods to protect critical infrastructure. I’m always looking to connect with researchers in similar fields, so feel free to reach out if anything piques your interest!